While reading for Monday's class discussion, I found that two of the articles, "News & the news media in the digital age: implications for democracy," by Herbert J. Gans and "Twitter: Microphone for the masses?" by Dhiraj Murthy, both made me think back to a seemingly unrelated event: my mother's only comment to me on Facebook. Ever.

While I'm sure the goal was to think of lofty ideas about technology helping foster democracy around the world, or giving a voice to millions who would otherwise have had no way to publish their ideas, I keep thinking about how technology hasn't really changed the way we communicate.

What sparked this was my mom's one and only post on my Facebook status. She's not at all sure about Facebook; she accidentally signed up with both her work and home email addresses (we sorted that out over Christmas break one year). So imagine my surprise one Veterans Day when I posted a little shout-out to all my veteran friends and my spouse, and out of nowhere Mom chimed in to say not to forget "your dad's brother, Harry, who served in the Air Force." Wow. Well, first: I never met my "dad's brother, Harry." He was killed when my father was only 12. Second, how in the world did my mother decide that that was the day to finally log in to Facebook and start reading her newsfeed?

(Side note: I was going to track down this post and put a screenshot of it here, but with the horrid new Facebook Timeline, I can't find it. So instead, you get this picture of my cat Lou. More on him later.)

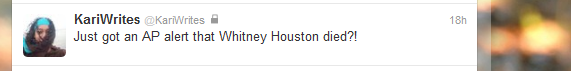

So while I'm now armed with a Twitter feed where, in 140 characters, I can spread news, thoughts, ideas, and musings, it doesn't really give me the opportunity to do or say anything I wouldn't have before. And while I agree that it gives a platform to people who need to make political statements or publicize news events, it doesn't give them the impetus; that is something we're born with, and it comes from a much more human source. Twitter doesn't give a voice to the masses so much as it gives them a microphone. The same goes for Facebook. Having an Internet connection doesn't make me post anything I wouldn't say to most of my friends anyway; it just lets me interact more easily with a geographically diverse group.

My mother's post convinced me that our intentions and inclinations as human communicators likely won't change with the medium. No matter what story I was telling, Ma would be sure to pipe up with an element she thought I was missing, or elaborate on a family connection I hadn't fully explained. Even in her one foray into Facebook Newsfeed-land, she responded just as she would have had we been in the same room.

Facebook has done one thing for me: it has helped point out my failings as a pet owner. Lou had to have a tooth pulled on Friday, something I feel I should have noticed a few weeks ago. While he was at the vet on Friday, I looked through my Facebook pictures and saw that his always-present snaggle tooth had been shorter than it looked when I noticed it Thursday night, which is what prompted the early-morning vet run. In those Facebook pictures, I had evidence that I was an unobservant pet owner. Lou's fine now. He's getting his medicine, kitty "advil," and a lot of soft treats. Thank you, Facebook. Thanks for helping us pass along guilt, be it in the form of motherly asides or pet-owner failures.